Why behind AI: Kimi starts thinking

Moonshot AI is one of the Chinese frontier labs most visible to Western audiences and is known predominantly for Kimi K2. In my introduction to Alibaba Cloud I wrote about the overall model landscape in China:

Does being behind the frontier labs matter for their core market in China? So far, their edge is both in terms of compute as well as multi-lingual capabilities and a strong focus on coding. These make the Qwen family of models useful across a variety of applications. The DeepSeek team seems more focused on research and experimentation (suitable for their background in physics and finance), while the new players like GLM and Kimi K2 are, well, literally funded by Alibaba. Other big players in China are also moving in (Baidu, Tencent, and Huawei), but so far their performance seems to mimic that of Apple's and Microsoft's homegrown efforts in terms of limited impact.

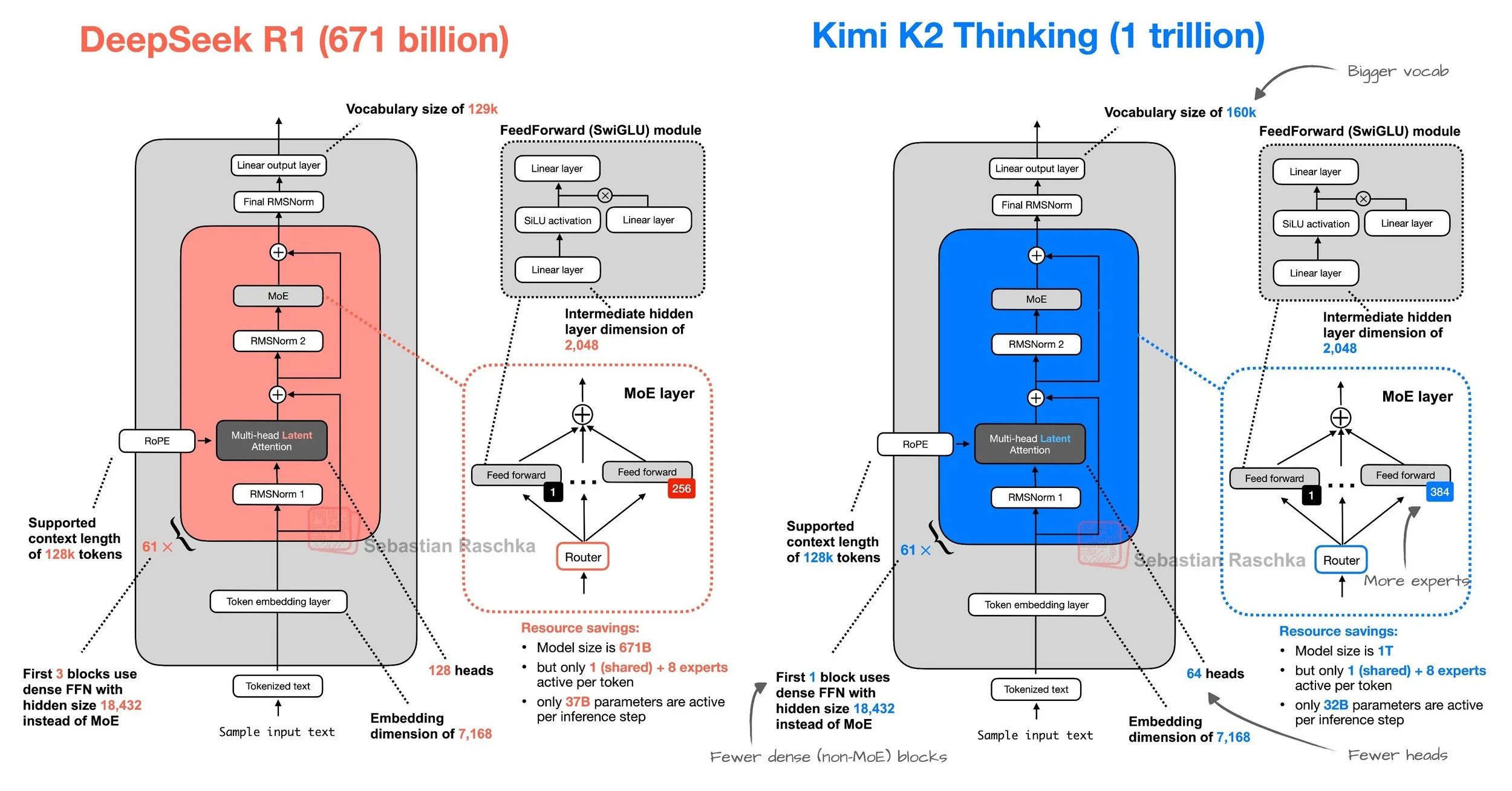

Kimi K2 has gained a lot of traction in the last year, partly because the company has focused on delivering a usable chat experience with strong writing skills, much closer to what you would expect from a US-based lab like Anthropic. Kimi K2 is also known as one of the main MoE (Mixture of Experts) models on the market: a router activates only a small subset of expert sub-networks for each token, so roughly 32B parameters do the work at any given step rather than the whole network. This makes it quite efficient to run for its size as a self-hosted open source model. An AI researcher compared the architecture to DeepSeek R1, but scaled further.
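To make the Mixture of Experts idea more concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy illustration, not Moonshot's implementation: the experts are scalar functions, the gate scores are made up, and the names `route_top_k` and `moe_forward` are hypothetical. The only figures taken from the article are the 32B active parameters and the 1T total.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    order = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    top = order[:k]
    weights = softmax([gate_scores[i] for i in top])
    return list(zip(top, weights))

def moe_forward(x, experts, gate_scores, k=2):
    """Weighted sum over the selected experts only; the others are never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Toy experts: each one just scales its input (assumption for illustration).
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
gate_scores = [0.1, 2.0, -1.0, 2.0]  # hypothetical router output for one token

selected = route_top_k(gate_scores, k=2)
output = moe_forward(1.0, experts, gate_scores, k=2)

# Share of parameters active per token, using the article's 32B and 1T figures.
active_fraction = 32e9 / 1e12

print(selected, output, f"{active_fraction:.1%}")
```

The point of the sketch is the cost profile: only the selected experts run for a given token, which is why a model with a very large total parameter count can still be relatively cheap per generated token.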

Source: @rasbt on X

Kimi K2 Thinking is the first reasoning model they have released, and the results are…interesting, to say the least.

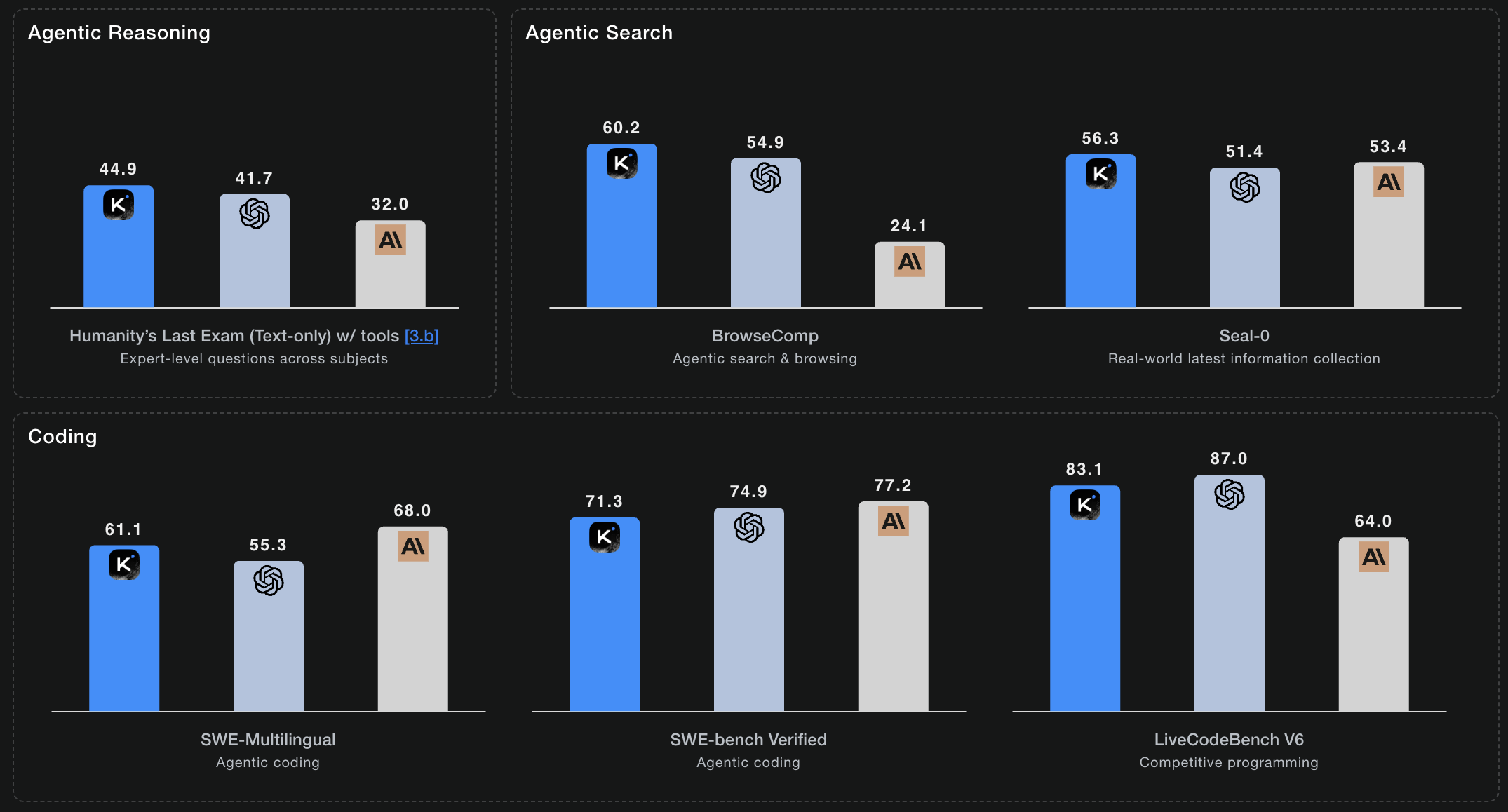

Source: Kimi K2 Thinking announcement post

K2 Thinking demonstrates outstanding reasoning and problem-solving abilities. On Humanity’s Last Exam (HLE)—a rigorously crafted, closed‑ended benchmark—spanning thousands of expert‑level questions across more than 100 subjects, K2 Thinking achieved a state-of-the-art score of 44.9%, with search, python, and web-browsing tools, establishing new records in multi‑domain expert‑level reasoning performance.

By reasoning while actively using a diverse set of tools, K2 Thinking is capable of planning, reasoning, executing, and adapting across hundreds of steps to tackle some of the most challenging academic and analytical problems. In one instance, it successfully solved a PhD-level mathematics problem through 23 interleaved reasoning and tool calls, exemplifying its capacity for deep, structured reasoning and long-horizon problem solving.

Humanity's Last Exam is a well-respected benchmark of 3,000 graduate-level questions spanning more than 100 academic disciplines, designed specifically to test deep reasoning and domain expertise rather than simple recall.

Source: Kimi K2 Thinking announcement post

What makes these results very interesting is that, when using significantly more compute than usual in "heavy mode", Moonshot is able to beat Grok's result and remain significantly ahead of GPT-5 Pro. xAI had put a lot of stock in its HLE result with Grok Heavy as clear proof that it is the "smartest scientific model in the world", yet it can no longer hold that title.

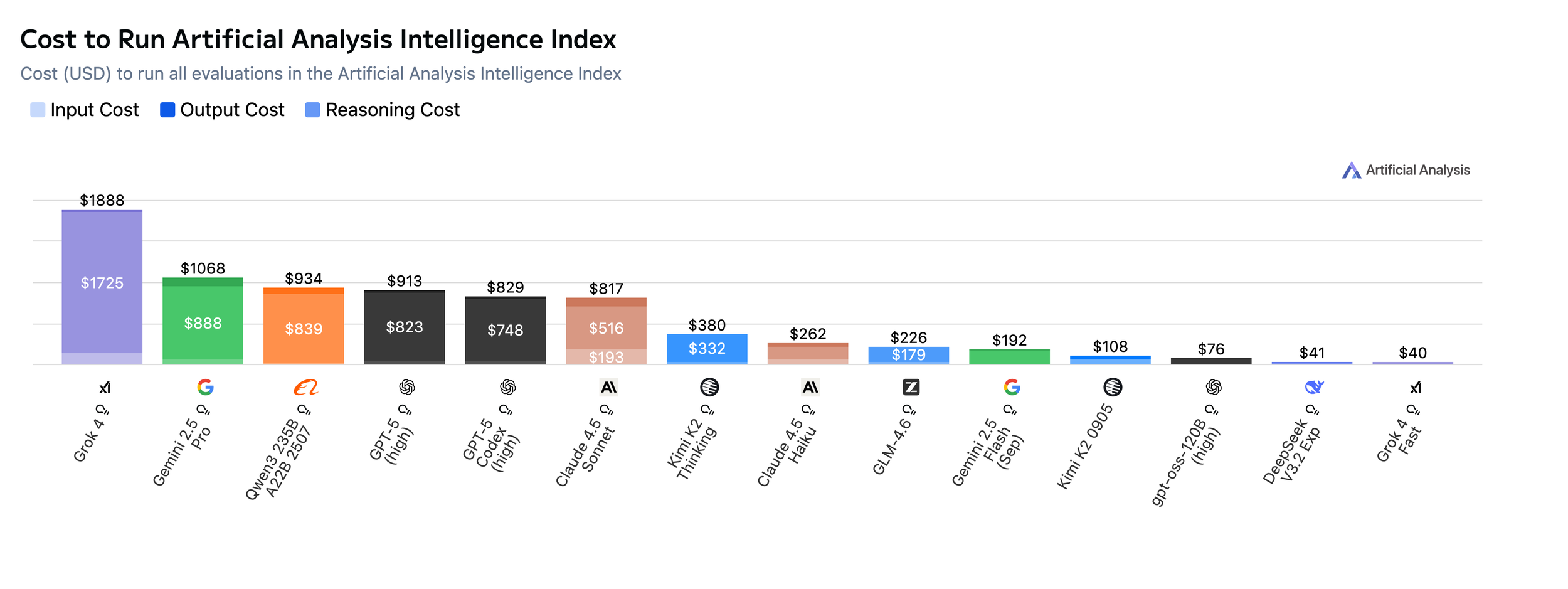

Source: Artificial Analysis

They achieve this by running a ridiculous number of reasoning steps, consuming more tokens than any other model so far on the Artificial Analysis benchmarks.

Source: Artificial Analysis

On paper, this is compensated by quite aggressive pricing, which leads to a rather reasonable total cost of $380 to run the benchmarks. For comparison, the same benchmarks with Grok 4 come to $1,888. It's unclear what the "heavy" model comparison would look like, but Grok 4 Heavy is around 10 times more expensive per token (although it could potentially solve the benchmark tasks faster and with fewer total tokens). In practice, things depend on who is actually hosting the model.
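The trade-off between token volume and per-token price can be sketched in a few lines. The $380 and $1,888 totals come from the figures above; the token counts and per-million prices in the second half are made-up round numbers for illustration only, and `benchmark_cost` is a hypothetical helper, not an Artificial Analysis formula.

```python
def benchmark_cost(input_tokens, output_tokens, price_in_per_m, price_out_per_m):
    """Total USD cost from token counts and per-million-token prices."""
    return (input_tokens / 1e6) * price_in_per_m + (output_tokens / 1e6) * price_out_per_m

# Totals quoted in the article.
kimi_total = 380
grok4_total = 1888

ratio = grok4_total / kimi_total        # how many times more Grok 4 costs
savings = 1 - kimi_total / grok4_total  # relative saving with Kimi K2 Thinking

# Hypothetical numbers: a verbose but cheap model vs. a terse but pricey one.
verbose_cheap = benchmark_cost(0, 100_000_000, 0.0, 2.0)
terse_pricey = benchmark_cost(0, 30_000_000, 0.0, 15.0)

print(f"Grok 4 costs {ratio:.1f}x as much; Kimi saves {savings:.0%}")
print(f"verbose/cheap: ${verbose_cheap:.0f}, terse/pricey: ${terse_pricey:.0f}")
```

With the article's totals, Grok 4 comes out at roughly five times the cost, so a model can burn far more tokens and still be the cheaper option as long as its per-token price is low enough.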

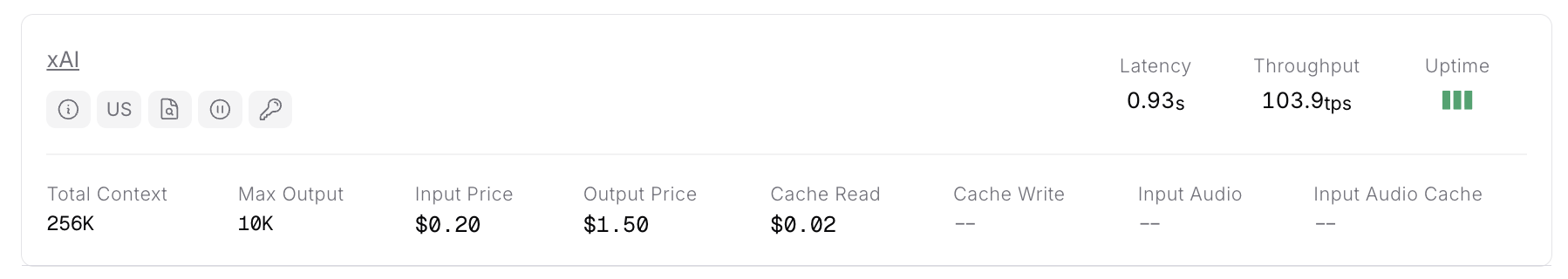

Source: OpenRouter

The launch was a bit shaky: currently only Moonshot AI hosts the model with reasonable uptime. Some US providers are trying to set it up, but with such a large model it will take a while before a stable enough service is established.

Source: OpenRouter

More importantly, we have to note that the early adopters of new model launches outside the lab's own primary chat surface (e.g., ChatGPT) are predominantly developers using them for coding. This is where Kimi K2 faces a number of challenges, since benchmark results rarely translate into better coding outcomes. If we compare with xAI's Grok Code Fast 1:

Source: OpenRouter

The xAI model runs at 3x the tokens per second, is hosted in the US by a trusted entity, and costs 70% less than Kimi K2 Thinking to run the Artificial Analysis benchmarks.

Source: OpenRouter

Not surprisingly, the total usage of Kimi K2 Thinking would barely match that of the fifth-largest consumer of Grok Code Fast 1 on OpenRouter. So, if competing on coding is not ideal, the best odds for Kimi K2's reasoning model are agentic inference for AI roleplay/gaming (where the preference is for very fast and cheap models) and scientific work (where nobody will buy inference directly from Moonshot).

The release of Kimi K2 Thinking has been hyped on X as a "DeepSeek moment", but this looks like a knee-jerk take. DeepSeek benefited from being one of the general public's first introductions to reasoning models, and it was easy to self-host thanks to a variety of smaller distillations. At 1T parameters, hosting Kimi K2 Thinking on recent NVIDIA hardware requires $500k+ of server equipment.
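A back-of-the-envelope calculation shows why a 1T-parameter model is hard to self-host. The sketch below only estimates memory for the weights at a few illustrative precisions; the 80 GB per-GPU capacity and the 25% overhead for KV cache and activations are assumptions of mine, not figures from Moonshot or NVIDIA, and the helper names are hypothetical.

```python
import math

def weight_memory_gb(num_params, bits_per_param):
    """Memory needed to hold the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

def gpus_needed(num_params, bits_per_param, gpu_memory_gb=80, overhead=1.25):
    """GPUs required, assuming a fixed overhead factor on top of the weights.

    gpu_memory_gb and overhead are illustrative assumptions.
    """
    total_gb = weight_memory_gb(num_params, bits_per_param) * overhead
    return math.ceil(total_gb / gpu_memory_gb)

PARAMS = 1_000_000_000_000  # the 1T parameter size mentioned in the article

for bits in (16, 8, 4):
    gb = weight_memory_gb(PARAMS, bits)
    n = gpus_needed(PARAMS, bits)
    print(f"{bits:>2}-bit weights: {gb:,.0f} GB, about {n} GPUs at 80 GB each")
```

Even under aggressive quantization the weights alone span several high-end accelerators, which is the main reason the smaller DeepSeek distillations were so much easier to run locally.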

None of this is meant to take away from the achievements demonstrated by Moonshot AI. It's clear that with this release, China (and Alibaba Cloud specifically) is now able to host a frontier-level intelligence model, which will complement the significant progress made on coding models such as Qwen 3/MiniMax M2/GLM 4.6.

If you want to try it out, Kimi is the Moonshot-hosted platform. For chat purposes, applications such as T3 Chat host early releases such as this one. Although T3 Chat is an American company, it would still be getting its API access via the Moonshot hosting on OpenRouter, so don't enter confidential company information.