The Infra Play #115: The Alibaba vision

As covered this week in "Why behind AI", while the LLMs most used commercially today, and the ones that rank highest on benchmarks and outcomes, all come from frontier labs (OpenAI, Anthropic, DeepMind, and xAI), the world of open-source AI is almost completely dominated by Chinese companies.

Source: State of AI 2025

While the most recognizable name in the West today is DeepSeek, the reality is that Alibaba's Tongyi Qianwen (Chinese: 通义千问), also known as Qwen, has become the dominant family of open-source models being adopted globally.

In a recent speech, Wu Yongming, the CEO of Alibaba, announced a $52B investment over the next three years to continue scaling new training runs and servicing inference. This is the same amount of funding that xAI and Anthropic have raised combined since their inception. To put it mildly, Alibaba is uniquely positioned to capture demand from non-Western companies and to drive innovation through the hundreds of thousands of researchers and smaller companies that use their open-source models to build on top of them.

The key takeaway

For tech sales and industry operators: Most CROs and CEOs are still pitching global TAMs as if Western API-powered applications will penetrate APAC the way they've scaled in North America and Europe (they won't). Qwen's 600M downloads and 170K derivative models represent distribution that most Western companies will never achieve in markets where data sovereignty and cost matter more than cutting-edge benchmarks. If your company is investing significant resources to enter markets currently dominated by Alibaba Cloud, you need to evaluate whether leadership understands that geopolitical boundaries now define addressable markets, not just feature comparisons. More concerning, homegrown solutions powered by Chinese open-source models are catching up across use cases where "good enough" beats "cutting edge." With $52B being deployed over three years to close that gap, your competitive advantage has a shelf life you should be measuring in quarters, not years.

For investors and founders: Most likely we are seeing the creation of a permanent duopoly when it comes to cloud infrastructure software, with both the US and China forming a network of state-supported players that drive the majority of infrastructure and software creation. The key player in China has emerged as Alibaba, which currently offers the most comprehensive and best-performing open-source models on the market, while financing a number of key "competitors." The next three years will see massive capital investment ($52B; more funding than xAI and Anthropic combined) in order to cement these early gains into a durable advantage. It's clear that Alibaba leadership is already eyeing a post-AGI world, but there is a question of whether their approach to it, based on ecosystem advantages, is the right one. It's entirely possible that the Western frontier labs continue to maintain an advantage and can get to AGI with a single key breakthrough from one of the top four players. If AGI is not actually feasible, the current investment is still likely to help establish them as the dominant Eastern hyperscaler, particularly when we consider that they are running the business on a long-term horizon and understand that AI is about speed to dominance, not haggling over gross margins for next quarter.

Scaling Alibaba Cloud

A world-changing, AI-driven intelligent revolution has just begun. For centuries, the industrial revolution amplified human physical strength through mechanization, and the information revolution amplified our information-processing capability through digitization. This time, the intelligent revolution will exceed our imagination. AGI will not only amplify human intellect but also liberate human potential, paving the way for ASI.

Over the past three years, we’ve clearly felt the speed: in just a few years, AI’s intelligence rose from a high-school level to a PhD level and can even win IMO gold medals. AI chatbots reached users faster than any technology in history. AI’s industry penetration is outpacing all prior technologies. Token consumption is doubling every two to three months. In the past year, global AI investment has surpassed $400 billion; over the next five years, cumulative AI investment will exceed $4 trillion—the largest compute and R&D investment in history—inevitably accelerating stronger models and faster application penetration.
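To put the "doubling every two to three months" claim in annual terms, here is a quick back-of-the-envelope sketch. It assumes the doubling period stays constant for a full year, which the speech does not claim; the function name is purely illustrative.

```python
def annual_multiple(doubling_months: float) -> float:
    """Growth multiple over 12 months for a quantity that doubles
    every `doubling_months` months (assumes a constant doubling period)."""
    return 2 ** (12 / doubling_months)


# The two ends of the range quoted in the speech.
fast = annual_multiple(2)  # doubling every two months
slow = annual_multiple(3)  # doubling every three months

print(f"{slow:.0f}x to {fast:.0f}x per year")  # prints "16x to 64x per year"
```

In other words, if the quoted pace held for twelve months, token consumption would grow somewhere between 16x and 64x in a year.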

Achieving AGI—a system with human-like general cognitive ability—now appears inevitable. But AGI is not the endpoint; it is a new starting line. AI will not stop at AGI; it will move toward ASI, which surpasses human intelligence and can self-iterate.

Choosing to start the speech with a focus on AGI rather than talking about adoption rates of Alibaba's products sets up a very specific tone. The vision of Alibaba is not limited to its own commercial ambitions, but is seen as a stepping stone in the race to AGI.

The goal of AGI is to free humans from 80% of routine work so we can focus on creation and exploration. ASI, as a system that fully surpasses human intelligence, could create “super scientists” and “full-stack super engineers.” At unimaginable speed, ASI could tackle unsolved scientific and engineering problems—medical breakthroughs, new materials, sustainable energy and climate solutions, even interstellar travel—driving exponential technological leaps into an unprecedented intelligent era.

It's important to calibrate the context of this statement. One of the biggest challenges for China is seen as the innovation gap. US companies still dominate the "zero to one" innovation pipeline, while the Chinese industry has been able to perfect scaling "one to infinity." In recent years, this can be seen in the quickly widening gap in infrastructure buildouts and the significant advancements in high-capacity batteries and solar panels across a variety of applications. China can also be seen as a "nation of engineers," as the majority of high-profile individuals in both politics and business have engineering backgrounds or have spent most of their time in roles that require such knowledge.

So the pitch here has less to do with the "world of plenty" that we often see in Western commentary, and more to do with AGI and ASI as a lever to drive an innovative mindset across the nation.

Our view of the three stages toward ASI:

1. Intelligence Emergence — “Learn from humans.”

Decades of internet development digitized nearly all human knowledge. Language carries this information—the complete corpus of human knowledge. Based on this, large models first learned to understand that corpus, gained generalized intelligence, and exhibited general conversation and intent understanding, and are developing multi-step reasoning. AI is now approaching top human scores across disciplines (e.g., IMO gold level), making it increasingly capable of entering the real world to solve real problems and create real value.

2. Autonomous Action — “Assist humans.” (our current stage)

AI is no longer confined to language; it can act in the real world. Given human goals, AI can break down complex tasks, use and build tools, and autonomously interact with digital and physical worlds, producing major real-world impact.

The key leap is Tool Use—connecting models to all digital tools to execute real-world tasks. Just as human evolution accelerated with tool creation and use, large models now have tool-use capability. Through tool use, AI can call software, APIs, and devices to accomplish complex tasks.

A second key is coding ability. Stronger coding enables AI to solve more complex problems and digitize more scenarios. Today’s agents are early, handling mostly standardized, short-cycle tasks. To tackle complex, long-cycle work, coding is crucial: an agent that can code can, in principle, solve arbitrarily complex problems—like an engineering team—by understanding requirements, then autonomously coding and testing. Developing coding ability is a must on the road to AGI.

In the future, natural language will be the source code of the AI era. Anyone can create their own agent via natural language. You state needs in your native tongue; AI writes logic, calls tools, builds systems, and completes nearly all digital work, while operating physical devices through digital interfaces. There may be more agents and robots than people, working alongside us and impacting the real world. Along the way, AI will connect to most real-world scenarios and data, laying the groundwork for future evolution.

Similar to the Western frontier labs, coding is seen as one of the keys to unlocking impactful AI.

3. Self-Iteration — “Surpass humans.”

Two key elements:

(a) AI connects to full, raw data from the physical world.

AI progresses fastest today in content creation, math, and coding, where knowledge is 100% human-defined and encoded in text—so AI can fully understand the raw data. In other fields and the broader physical world, AI mostly sees human-summarized knowledge, lacking broad, raw, interactive data. To make breakthroughs beyond humans, AI needs direct, comprehensive raw data from the physical world.

Example: a carmaker CEO decides next year’s product via surveys and internal debates—second-hand data. If AI could access all raw data about a car, its next design could far exceed what brainstorming produces. Early rule-based approaches in autonomous driving also fell short; the new wave uses end-to-end training from raw camera data to achieve higher capability. Merely teaching AI our summarized rules isn’t enough; only by letting AI continually interact with the real world—gathering more complete, truer, real-time data—can it model the world, discover deeper patterns beyond human cognition, and create stronger intelligence.

(b) Self-learning.

As AI penetrates more physical-world scenarios and understands more real-world data, models and agents will grow stronger, with the chance to build their own training infra, optimize data pipelines, and update model architectures—achieving self-learning.

Future models will continuously interact with the real world, acquire new data, receive real-time feedback, and—via reinforcement and continual learning—self-optimize, correct bias, and iterate. Every interaction is a mini-fine-tune; every feedback is a parameter update. After countless loops of execution and feedback, AI will self-iterate into intelligence that surpasses humans, forming an early ASI.

Once a certain singularity is crossed, it’s as if society hits the accelerator—technological progress outpaces our imagination, and a burst of new productivity ushers in a new stage for humanity. The path to ASI is becoming clearer before our eyes. As AI evolves and industry demand explodes, AI will also bring a massive transformation to the IT industry.

Continual learning and real-world AI feedback are seen as critical to achieving better-performing models. This is similar to what a lot of key researchers in the West see as the challenge of building a "world model" (and we should note that a significant percentage of those were either born in China or of Chinese descent). This is very technical, but important to frame the context:

One view of intelligence is that it is simply the ability to solve a great variety of problems. Under this pragmatic definition, LLMs would clearly count as intelligent. But even if we grant that LLMs demonstrate some form of intelligence, we’re left with another question: In performing as well as they do, do they reason? Do they represent the structure of the world in a robust way? How, precisely, do they obtain their output? We don’t know.

To understand why we don’t know, we have to distinguish the model’s architecture—the way a researcher sets up the blank model before training it—from its training objective—which, in pretraining, is to successfully predict the next token in a document—and its internal representations—the concepts, algorithms, features, and heuristics learned during training. Training encodes these representations in the final values of the weights, which dictate its outputs.

We understand an LLM’s architecture and objective just fine, but these learned and massively distributed representations remain unclear. Does an LLM reason in some strict sense, or does it merely skillfully exploit masses of statistical associations? Relatedly, does it have a world model—a stable, self-consistent map of reality it manipulates to answer questions?

Opinions differ. The skeptical hypothesis is that the models are memorizing answers or pattern-matching chains of words—essentially, babbling. But we know that they can reason. We also know that they memorize training data, as revealed by the collapse in performance when tested on newly collected data.

More recent evaluations aim to test this reasoning gap, or the difference between the model’s ability to solve new problems and simply recalling or generalizing from old answers.

Patel, Dwarkesh. The Scaling Era: An Oral History of AI, 2019–2025 (pp. 68-69).

We don't actually know whether the current vision on how we can scale to AGI would work. We know that throwing compute at it still works, and we are hoping that throwing data at it would help, but that's not a guarantee. One of the most interesting challenges of AI becoming essentially bipolar, with the USA and China sponsoring and supporting their own preferred companies, is that this leads to a risk where one of the sides decides to pursue a different path for training new models that goes nowhere. This is something that occurred in Japan during the personal computer revolution, as local companies would focus on essentially building custom software and hardware for the local market that over time became hopelessly outdated, while the rest of the world adopted common standards and technology.

Our first judgment: Large models are the next-generation operating system.

The platform represented by large models will replace today’s OS status to become the OS of the AI era. Nearly all real-world tool interfaces will connect to large models; user needs and industry applications will be executed via model-linked tools. The LLM will be the orchestration middle layer for users, software, and AI compute resources—the OS of the AI era.

Analogies: Natural language is the new programming language; agents are the new software; context is the new memory; models connect to tools and agents via interfaces like MCP (akin to the system bus in the PC era); A2A protocols enable multi-agent collaboration (like APIs among software).

What most people who proudly call AI a bubble don't understand is that there is a big difference between what happened in the dot-com era and what's going on today. Scaling the internet was a question of network effects, and the financial bubble was tied to companies raising significant funding on the premise of having captured a certain percentage of active users. Where things went wrong was that user adoption was simply not that high and there were structural infrastructure issues that prevented further growth. It took another 15 years until fiber, 5G, and mobile were able to scale the network.

AI doesn't have a network problem today, as demonstrated by ChatGPT having 800M users. AI is very much a new software layer that either complements existing functionality or completely replaces it. As such, it's a predictive operating system rather than a declarative one. Another benefit is that it doesn't need to replace the existing software platforms yet and can coexist long term.

Large models will “devour” software.

As the next-gen OS, large models will let anyone use natural language to create unlimited applications. Much software that interacts with the compute world may become model-generated agents, not today’s commercial software. The developer base could expand from tens of millions to hundreds of millions. Previously, due to development costs, only a small set of high-value scenarios became commercial software; in the future, end users will satisfy their needs through large-model tools.

My main thesis behind AI being inevitable is that users just want to tell the thing to do the thing. The need to learn complex interfaces, the majority of them very poorly optimized from a user experience perspective, has always been a core weakness of interacting with the application layer. The minimum technical knowledge required to create applications severely limited what software actually got created. No-code/low-code applications tried to bridge the gap, but they have a fundamental flaw: they are still limited by whatever capabilities the creator of the software was able to produce. By putting a developer in everybody's pocket, this limitation disappears.

Deployment will diversify—the model will run on all devices.

Today’s mainstream “call an API to use a model” is just an early, crude stage (like timesharing on a mainframe). It can’t solve data persistence, lacks long-term memory, real-time capability, privacy, and malleability. In the future, models will run across all compute devices, with persistent memory, edge-cloud synergy, and even on-the-fly parameter updates and self-iteration—like today’s OS running in all environments.

This is a tricky one since obviously it's in their interest to promote their strategy of releasing multiple models in different sizes suitable for a variety of devices and use cases. While I agree with the strategy long term, I also do not use open-source models in my daily work because accessing best-in-class models is trivial and practically speaking cheaper. There will be a moment when an open-source model significantly outperforms what's available today from the frontier labs while running on a smartphone. This moment is not today, and as an early adopter, I would rather always use the cutting edge.

Our strategic choice: Tongyi Qianwen chooses open—to build the Android of the AI era.

We believe open-source models will create greater value and penetrate more scenarios than closed ones. We resolutely choose open source to fully support the developer ecosystem and explore AI’s possibilities with developers worldwide.

"We want to be the Android of AI" is an interesting choice. The actual power usage is happening on Linux, which would be a better analogy. The choice of words here is either based on local market perceptions (since all Chinese manufacturers build on top of Android) or signals that most of the focus will remain on practical, smaller implementations at scale.

Our second judgment: The Super AI Cloud is the next-generation computer.

Large models—the new OS—run on AI Cloud to meet anyone’s needs. Everyone may have dozens to hundreds of agents working 24/7, requiring massive compute.

Inside data centers, the compute paradigm is shifting from CPU-centric to GPU-centric AI compute—demanding denser compute, more efficient networks, and larger clusters. This requires abundant energy, full-stack technology, and millions of GPUs/CPUs, with networks, chips, storage, and databases working efficiently—operating 24/7 to meet global demand. Only super AI clouds with ultra-scale infrastructure and full-stack capabilities can carry such demand. In the future, there may be only 5–6 super cloud platforms worldwide.

In this new era, AI will replace energy as the most important commodity, driving daily work across industries. Most AI capabilities will be generated and delivered over cloud networks as tokens. Tokens are the electricity of the future.

In this era, Alibaba Cloud positions itself as a full-stack AI service provider. Tongyi Qianwen has open-sourced 300+ models, covering all modalities and sizes; to date, it has 600 million+ downloads, with 170,000+ derivative models, forming the world’s leading open-source model matrix, arguably the most widely deployed across devices.

Now the tone suddenly changes, moving towards the core of their most important business: Alibaba Cloud, the most important hyperscaler in the East.

Source: Alibaba June’25 Earnings report

Alibaba Cloud operates at a $19B run rate, which makes it the fourth largest cloud provider after AWS ($124B), Azure ($75B), and GCP ($50B). A 25% growth rate is good but not sufficient for them to actually catch up with the big three if things continue as they are.
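To make "not sufficient to catch up" concrete, here is a rough sketch of the compounding math. The $19B and $50B run rates and the 25% growth rate come from the figures above; the rival growth rates (0%, 10%, 25%) are hypothetical scenarios chosen for illustration, not reported numbers, and the function name is illustrative as well.

```python
import math


def years_to_catch_up(own: float, rival: float,
                      own_growth: float, rival_growth: float) -> float:
    """Years until `own` revenue matches `rival` revenue, assuming both
    compound at constant annual growth rates. Returns math.inf if the
    gap never closes."""
    if own >= rival:
        return 0.0
    if own_growth <= rival_growth:
        return math.inf
    # Solve own * (1 + g_own)^t = rival * (1 + g_rival)^t for t.
    return math.log(rival / own) / math.log((1 + own_growth) / (1 + rival_growth))


ALIBABA_CLOUD = 19.0   # $B run rate, from the article
GCP = 50.0             # $B run rate, from the article
ALIBABA_GROWTH = 0.25  # 25% growth, from the article

# Hypothetical GCP growth scenarios (assumptions, not reported figures).
for gcp_growth in (0.0, 0.10, 0.25):
    years = years_to_catch_up(ALIBABA_CLOUD, GCP, ALIBABA_GROWTH, gcp_growth)
    print(f"GCP growing {gcp_growth:.0%}: {years:.1f} years")
```

Even in the unrealistic scenario where GCP stops growing entirely, closing the gap takes more than four years; if GCP simply matches Alibaba Cloud's growth rate, the gap never closes.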

As with the other three, cloud is not the only game in town for these conglomerates. Alibaba Cloud makes up around 14% of the group's revenue, which puts it closest to GCP's position within Google, rather than the much higher importance that AWS and Azure have for their parent companies (around the 40% range).

The question of course is: what is the future of Alibaba Cloud if we can get to AGI?

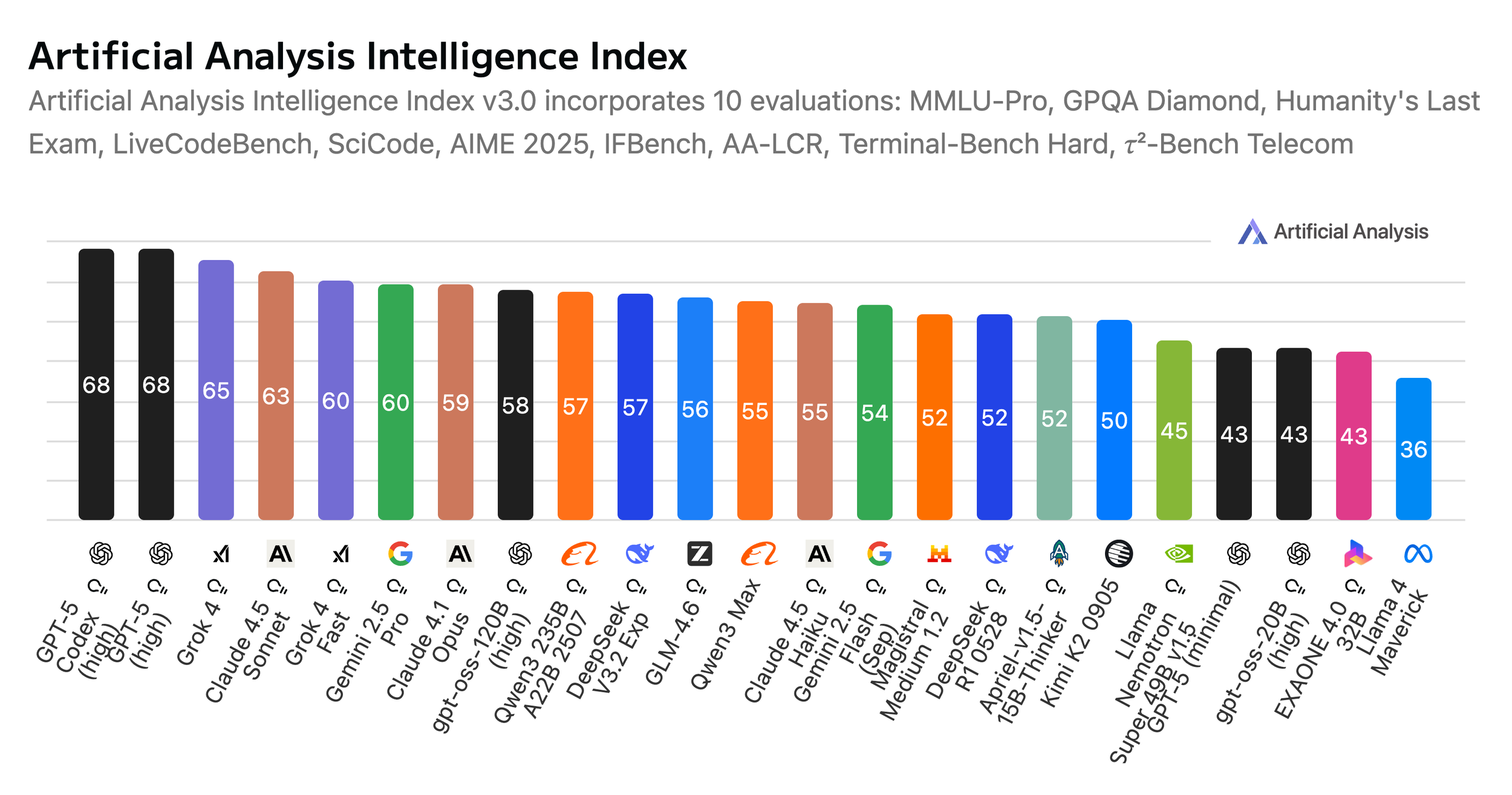

Source: Artificial Analysis

Alibaba Cloud is building a new AI supercomputer—combining leading AI infrastructure with leading models—to co-innovate across infrastructure and model architectures, ensuring maximum efficiency when calling or training large models on Alibaba Cloud and making it the best AI cloud for developers.

The AI industry is moving faster than expected, and demand for AI infrastructure is beyond expectations. We are actively advancing a three-year $52B AI infrastructure plan and will continue to increase investment. Looking at long-term industry development and customer needs, and to welcome the ASI era, by 2032 (vs 2022, GenAI’s first year) Alibaba Cloud’s global data-center energy consumption will rise 10×. We believe such saturation-level investment will propel AI’s development and usher in the ASI era.
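For a sense of scale, the 10× energy target can be converted into an implied annual growth rate. This is a minimal sketch that assumes smooth compounding between 2022 and 2032, which the speech does not specify; the function name is illustrative.

```python
def implied_cagr(multiple: float, years: int) -> float:
    """Constant annual growth rate that produces `multiple` over `years`."""
    return multiple ** (1 / years) - 1


# 10x data-center energy consumption by 2032 vs. 2022, per the speech.
rate = implied_cagr(10, 2032 - 2022)
print(f"Implied annual growth: {rate:.1%}")  # roughly 26% per year
```

Under that assumption, a 10× increase over ten years works out to roughly 26% growth per year, which is in the same neighborhood as the 25% cloud growth rate cited earlier.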

Expanding their compute is critical here because of the need to catch up in both performance and efficiency.

Source: Artificial Analysis

Does being behind the frontier labs matter for their core market in China? So far, their edge is both in terms of compute as well as multi-lingual capabilities and a strong focus on coding. These make the Qwen family of models useful across a variety of applications. The DeepSeek team seems more focused on research and experimentation (suitable for their background in physics and finance), while the new players like GLM and Kimi K2 are, well, literally funded by Alibaba. Other big players in China are also moving in (Baidu, Tencent, and Huawei), but so far their performance seems to mimic that of Apple's and Microsoft's homegrown efforts in terms of limited impact.

Human–AI collaboration in the ASI era

As AI grows stronger—even surpassing humans—how will we coexist? We are optimistic. After ASI arrives, humans and AI will collaborate in a new way. Programmers have had a preview: we issue instructions, and with coding tools, overnight an AI can build the system we need—an early glimpse of future collaboration. From “Vibe Coding” to “Vibe Working.” In the future, every household, factory, and company will have numerous agents and robots working around the clock. Perhaps each person will “use” 100 GPUs working on our behalf.

Just as electricity once multiplied the leverage of human physical power, ASI will exponentially multiply intellectual leverage. What once took 10 hours may yield output multiplied tenfold or a hundredfold. History shows every technological revolution unlocks productivity and creates new demand. Humans will become more capable than ever.

Is everybody in China (and globally) excited about the bright new future? Sentiment seems mixed.

Source: Comments under the cls.cn article on the speech; translated from Chinese.

Finally: This is only the beginning. AI will restructure the entire infrastructure, software, and application stack, become the core driving force of the real world, and trigger a new wave of intelligent transformation.

Directionally correct. Now let’s see who can execute faster to the prize.