Why behind AI: The new (OpenAI) deal

Today, 28 October 2025, the tense negotiations between OpenAI and Microsoft produced a surprising outcome. As per my previous coverage on this:

The relationship between Microsoft and OpenAI remains one of the trickiest challenges to navigate. The terms and conditions of their agreement were a big win for OpenAI back in 2019, essentially getting a preferred-partner lane with access to massive amounts of compute and the distribution power of Azure. In return, they would owe 20% of their revenue to Microsoft until 2030 (or until AGI is announced, whichever comes first), while also giving all of their IP to Microsoft for free to license and distribute.

Today, this deal looks very rough. Sam Altman is trying to pivot away from it, both by turning OpenAI into a commercial organization rather than a non-profit and by flirting with "announcing AGI as achieved." He has also spent a significant amount of time cutting deals with a variety of other players, Oracle and SoftBank being the most notable.

The negotiation with Microsoft has already led to delays on certain plans by OpenAI, such as the acquisition of Windsurf. They ended up backing out of the deal because the IP for Windsurf would also have ended up owned by Microsoft, which literally develops VS Code, the editor that Cursor and Windsurf were forked from.

It's clear that GPT-5 is an attempt to put efficiency and outcome-based pricing at the forefront of the product. This is needed both because it improves their hand in case things get more contentious with Microsoft and because it should give them a little more breathing room when 20% of that revenue is going to Microsoft, essentially killing any chance of a positive margin.

On the product side they need to achieve 3 outcomes:

Towards (pro)consumers: Improve average revenue per customer and reduce the cost of subsidizing free usage. The best way to achieve this is to minimize unproductive usage (essentially the companion aspect of 4o usage), push as many queries as possible to lower compute configurations without significant penalties on retention, and push paying users into the Pro tier. GPT-5 is clearly aimed in this direction, particularly because it significantly reduces the Plus subscription benefits. Both the number of messages and the context window on Plus have been reduced to the point where it's no longer useful as a primary subscription for heavy users. When you also account for the fact that the best performance comes from GPT-5 Pro, the need for the highest subscription is obvious.

Towards developers: Developers predominantly need to use the API and will do so through application layers with quality-of-life improvements. There is a reason why the Cursor team was featured quite heavily in the presentation. The problem here is that Claude Code appears to be strongly preferred as a primary tool by developers for agentic workflows (the most token-consuming ones). Hence what looks like a joint play with Cursor to offer GPT-5 as the best model for the newly launched Cursor CLI agent (with free usage during the first days of the launch). Whether this will be a successful strategy is yet to be seen, but Anthropic is on pace to reach 40% of OpenAI's revenue this year thanks to dominating with developers, and this use case is too important to be playing catch-up in.

Towards businesses/Enterprise: This is a highly awkward product currently. Revenue in the range of $1B is negligible at their size, and Microsoft offers essentially an equivalent product in terms of average outcomes through Copilot for Business. The most confusing part is that Enterprise usage doesn't include the Pro model and the increased limits on Deep Research, both of which are the essential killer apps for pro users. Google pulled a similar confusing feat by launching an Ultra plan that's not available for businesses and includes their Deep Thinking mode, which is highly competitive. If GPT-5 is meant to improve outcomes in this direction, it's difficult to see how.

More importantly, for OpenAI to "escape the Microsoft death grip" and establish itself as the most valuable company long-term in tech, it needs to win in all 3 directions. The potential for this lies with launching their own browser and productivity applications. I spoke about this last week when discussing Google, who are arguably the biggest competitor today to OpenAI.

This is particularly important due to the business realities of models:

1. Research lab trains a model and spends Y dollars on it last year.

2. This year the product and sales teams monetize the model across the estate for hopefully Y + profit.

3. In parallel, the research lab is training a new model this year, which likely cost 2x or 3x Y.

4. Next year, the current model will be retired (completing its revenue added-value) and the new model needs to monetize at least its training cost + profit.

Where research labs need to get to in order to be profitable is a situation where they can monetize models for longer before they need to spend massive R&D costs on the next model. Most importantly, if they do spend a lot of R&D, then the updated model also needs to be better in order to recoup the investment.
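To make the cycle above concrete, here is a minimal sketch of the arithmetic. The 3x cost multiplier and the 20% revenue share come from the figures discussed in this piece; the 20% profit target, the function names, and the decision to ignore inference costs entirely are my own simplifying assumptions, so treat the output as directional rather than as a forecast.

```python
def training_costs(first_cost, multiplier, generations):
    """Training cost of each model generation, growing by `multiplier` each time."""
    return [first_cost * multiplier ** g for g in range(generations)]


def required_revenue(training_cost, profit_margin, revenue_share):
    """Revenue needed so that, after paying away `revenue_share` of it,
    the remainder covers the training cost plus the desired profit.
    Inference and other operating costs are deliberately ignored here."""
    return training_cost * (1 + profit_margin) / (1 - revenue_share)


# Illustrative inputs only: Y = 1 unit, each generation costs 3x the last.
costs = training_costs(first_cost=1.0, multiplier=3.0, generations=3)
# profit_margin=0.2 is a hypothetical target; revenue_share=0.2 mirrors the Microsoft deal.
targets = [required_revenue(c, profit_margin=0.2, revenue_share=0.2) for c in costs]

for generation, (cost, target) in enumerate(zip(costs, targets), start=1):
    print(f"model {generation}: training cost {cost:.1f}Y, revenue needed {target:.2f}Y")
```

The point of the sketch is that the revenue bar rises with every generation, and any revenue share paid out of the top line raises it further.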

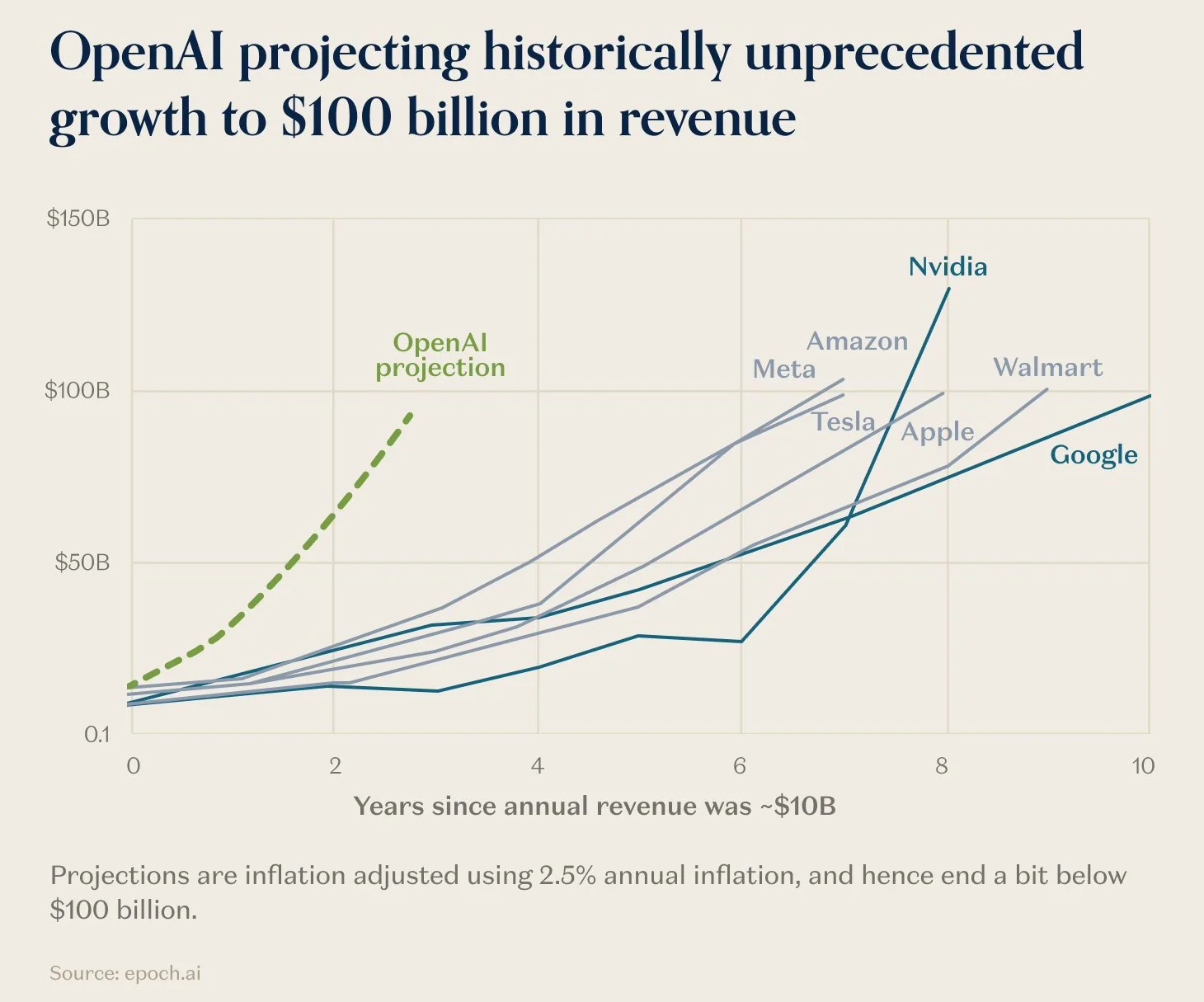

It should be obvious that the stakes here are existential for OpenAI. The contract that they have with Microsoft is simply not suitable for the organization to pursue the opportunity that it promised investors. The recent funding rounds were clearly based on the premise of OpenAI's potential growth, including API inference. As per my X post:

Since most didn't understand this part - currently OpenAI's official revenue is 70% B2C and 30% B2B as reported by the FT. The total run rate is $13B for this fiscal year. The problem with those figures is that they ignore the obvious revenue generated by OpenAI models for Azure inference (and we are not touching the Copilot value prop), which currently earns another $13B ARR. So when investors are evaluating their 3-5 year vision, they are accounting for the Microsoft deal to be over and for OpenAI to capture most of that API inference revenue. OpenAI's valuations only make sense when seen as a for-profit company (potentially public) that has no obligations anymore to Microsoft and owns its models fully. Those contractual obligations end when "AGI is achieved", which we already did, but the business couldn't afford Satya pulling the plug on the compute that he provides as retaliation. So until they have sufficient capacity build-out to service their own inference with Oracle, Broadcom, and NVIDIA, they need to play the game as it is.
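The figures in that post can be laid out as a quick back-of-the-envelope calculation. This is only a sketch using the numbers quoted above ($13B official run rate, a 70/30 split, and another $13B ARR of Azure inference); the variable names are mine, and treating the two revenue pools as simply additive is an assumption.

```python
official_run_rate = 13e9      # OpenAI's official run rate, as quoted above
b2c_share, b2b_share = 0.70, 0.30

b2c_revenue = official_run_rate * b2c_share
b2b_revenue = official_run_rate * b2b_share

azure_inference_arr = 13e9    # revenue from OpenAI models served via Azure, as quoted above

# Assumption: the two pools do not overlap and can simply be added.
combined = official_run_rate + azure_inference_arr
official_fraction = official_run_rate / combined

print(f"B2C: ${b2c_revenue / 1e9:.1f}B, B2B: ${b2b_revenue / 1e9:.1f}B")
print(f"Combined model revenue: ${combined / 1e9:.1f}B")
print(f"Share captured by OpenAI directly: {official_fraction:.0%}")
```

On these numbers, OpenAI directly captures only about half of the revenue its models generate, which is the gap investors are pricing in.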

Let’s take a look at the terms of the new agreement.

Since 2019, Microsoft and OpenAI have shared a vision to advance artificial intelligence responsibly and make its benefits broadly accessible. What began as an investment in a research organization has grown into one of the most successful partnerships in our industry. As we enter the next phase of this partnership, we’ve signed a new definitive agreement that builds on our foundation, strengthens our partnership, and sets the stage for long-term success for both organizations.

First, Microsoft supports the OpenAI board moving forward with formation of a public benefit corporation (PBC) and recapitalization. Following the recapitalization, Microsoft holds an investment in OpenAI Group PBC valued at approximately $135 billion, representing roughly 27 percent on an as-converted diluted basis, inclusive of all owners—employees, investors, and the OpenAI Foundation. Excluding the impact of OpenAI’s recent funding rounds, Microsoft held a 32.5 percent stake on an as-converted basis in the OpenAI for-profit.

The OpenAI for-profit restructuring is now completed, with OpenAI Group PBC being the new for-profit arm of the company. According to Sam: “the non-profit remains in control and, if we do our jobs well, will be the best-resourced non-profit ever. We are excited to get to work immediately deploying the capital. Our LLC becomes a PBC.”
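The stake figures in the agreement also let us back out an implied valuation for OpenAI Group PBC. This is a rough sketch: it assumes the $135 billion and 27 percent figures describe the same as-converted diluted basis, and since both are stated as approximate, the result is approximate too. The function name is hypothetical.

```python
def implied_valuation(stake_value, stake_fraction):
    """Total company value implied by a stake worth `stake_value`
    that represents `stake_fraction` of the company."""
    return stake_value / stake_fraction


microsoft_stake_value = 135e9   # "approximately $135 billion"
microsoft_stake_fraction = 0.27 # "roughly 27 percent"

valuation = implied_valuation(microsoft_stake_value, microsoft_stake_fraction)
print(f"Implied valuation: ${valuation / 1e9:.0f}B")
```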

The agreement preserves key elements that have fueled this successful partnership—meaning OpenAI remains Microsoft’s frontier model partner and Microsoft continues to have exclusive IP rights and Azure API exclusivity until Artificial General Intelligence (AGI).

It also refines and adds new provisions that enable each company to independently continue advancing innovation and growth.

What has evolved:

Once AGI is declared by OpenAI, that declaration will now be verified by an independent expert panel.

What is AGI? According to a recent white-paper that includes Eric Schmidt as a co-author, the definition can be considered as "AGI is an AI that can match or exceed the cognitive versatility and proficiency of a well-educated adult." They explain this in detail:

Source: A Definition of AGI

Currently they rank GPT-5 performance relative to different benchmarks at 57% towards AGI. While there are many different ways to approach this, the proposal outlined here is a reasonable way to think about how an "independent panel" might benchmark this. In practical terms, this means it is likely that any "pronouncement" of AGI might happen in the 2030s, likely after some legal back-and-forth for a few years. Timelines are quite critical here:

Microsoft’s IP rights for both models and products are extended through 2032 and now includes models post-AGI, with appropriate safety guardrails.

Microsoft’s IP rights to research, defined as the confidential methods used in the development of models and systems, will remain until either the expert panel verifies AGI or through 2030, whichever is first. Research IP includes, for example, models intended for internal deployment or research only. Beyond that, research IP does not include model architecture, model weights, inference code, finetuning code, and any IP related to data center hardware and software; and Microsoft retains these non-Research IP rights.

Azure will remain the primary provider of OpenAI inference for business users until at least December 2032. The "include models post-AGI" clause is a bit peculiar; I assume it would imply that they retain IP rights for anything they've already gained access to up until that date, even if AGI is declared earlier.

They will also have front-row access to all of OpenAI's research work until December 2030. This would imply a staged rollout of IP rights and visibility into the product pipeline.

Microsoft’s IP rights now exclude OpenAI’s consumer hardware.

OpenAI can now jointly develop some products with third parties. API products developed with third parties will be exclusive to Azure. Non-API products may be served on any cloud provider.

Big win for Sam and the team, particularly after the Windsurf fiasco. If they are going to play into all the new areas they want to enter, they need to be able to maintain their revenue and not have a "me too" product deployed immediately by Microsoft.

Microsoft can now independently pursue AGI alone or in partnership with third parties.

If Microsoft uses OpenAI’s IP to develop AGI, prior to AGI being declared, the models will be subject to compute thresholds; those thresholds are significantly larger than the size of systems used to train leading models today.

The original clause limiting Microsoft from working with other frontier labs on AGI has been perceived as a negative, and this is now rectified. Whether or not there is realistically compute, capital, and human capacity to work properly with multiple frontier labs simultaneously (or fully independently) is yet to be seen. The consensus seems to be that Mustafa has so far failed in his tenure as the "AI Tsar of Microsoft," particularly when you look at how integral his co-founders Demis Hassabis and Shane Legg from DeepMind are to Google's pursuit of AGI.

The revenue share agreement remains until the expert panel verifies AGI, though payments will be made over a longer period of time.

OpenAI has contracted to purchase an incremental $250B of Azure services, and Microsoft will no longer have a right of first refusal to be OpenAI’s compute provider.

The most practical of all clauses covers the allocation of capital. OpenAI no longer has to pay its 20% revenue share to Microsoft immediately each year, and $250B of Azure compute is now reserved going forward for OpenAI inference and research. This commitment, if realized, will also go a long way to push the Azure run rate (currently $86 billion) to close the gap with AWS. "Generational run" doesn't really cover it, but it's also still a directional opportunity, as OpenAI needs to actually generate this demand first. Another significant shift here is that Microsoft no longer has a right of first refusal on additional compute capacity, which could also make the whole deal look like a pre-negotiated discount structure that OpenAI may or may not use, depending on how its own datacenter buildouts are progressing. For Satya, this is probably the riskiest bet out of the whole negotiation, as Microsoft will have to win those workloads based on its sales execution.

OpenAI can now provide API access to US government national security customers, regardless of the cloud provider.

OpenAI is now able to release open weight models that meet requisite capability criteria.

The last two clauses are directly related to the trade-off of pushing for OpenAI to become the most successful tech company in the world while balancing the government vision for AI. As outlined in the USA AI Action Plan, open source models and access to models for government purposes are the cornerstones of the reimagining of the federal government in the age of AI.

Source: a16z substack

The OpenAI board wants to deliver essentially 7.5x the current ARR ($13B) over the next 36 months. While still an extremely challenging goal, this new agreement paves a path for this to be achieved.
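To get a feel for how steep that target is, here is a small sketch of the growth rate it implies. It assumes smooth compound growth from the $13B ARR over exactly 36 months, which is my simplification; real revenue will not grow this evenly.

```python
current_arr = 13e9   # current ARR, as cited above
multiple = 7.5       # target multiple over the period
months = 36

target_arr = current_arr * multiple

# Constant compound growth rates that would reach the multiple in the given time.
annual_growth = multiple ** (12 / months) - 1
monthly_growth = multiple ** (1 / months) - 1

print(f"Target ARR: ${target_arr / 1e9:.1f}B")
print(f"Implied annual growth: {annual_growth:.1%}")
print(f"Implied monthly growth: {monthly_growth:.2%}")
```

In other words, the business would need to come close to doubling every year, three years in a row.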

Victori spolia.